Adding fairness to dynamic pricing with Reinforcement Learning

Maximizing profit is a premise that has been present in the back of the mind of every trader and salesman since the earliest days of commerce; or so they thought. In reality, anyone seeking profit in an exchange of goods or services tends, unconsciously, to add some measure of fairness to the deal in order to cultivate the trust of a returning customer. The problem was that this trust was not being measured, but now Reinforcement Learning (RL) can help us gauge it.

A tradeoff between revenue and fairness

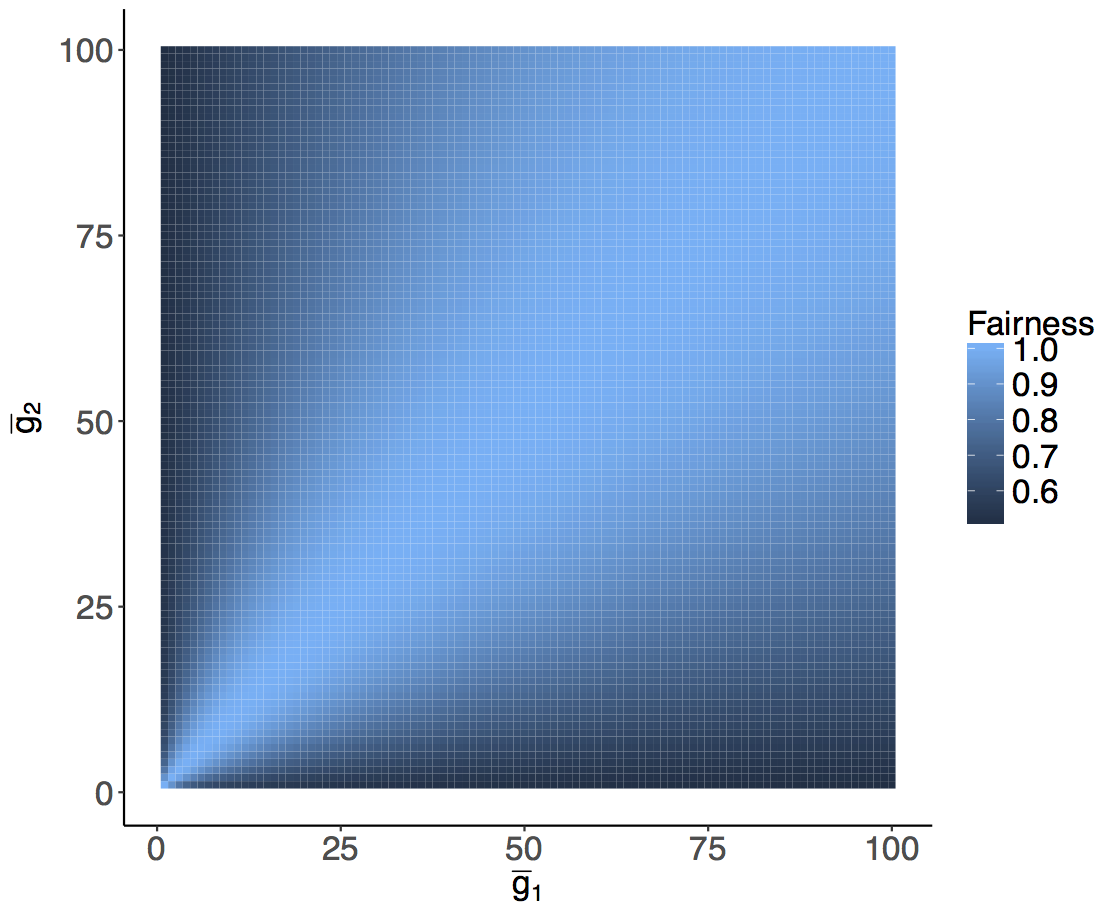

A group of scientists at BBVA Data & Analytics have just published a paper explaining how, by using Reinforcement Learning, they have been able to add a specific measure of fairness to pricing policies, thus increasing trust in how the bank engages with clients. The algorithm, which adjusts in real time to the conditions of the customers, considers not only profit maximization in its calculations but also a measure of fairness based on Jain’s index, under which the price-setting policy is applied homogeneously across a heterogeneous set of customer groups.
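To make the fairness measure concrete, here is a minimal sketch of Jain's index, which for n values x is (sum of x)^2 divided by n times the sum of x^2. It equals 1 when all values are identical and falls to 1/n when a single value dominates. Applying it to per-group mean prices, as done here, is an assumption made for illustration; the function name and the sample numbers are hypothetical.

```python
from typing import Sequence


def jains_index(values: Sequence[float]) -> float:
    """Jain's fairness index: (sum x)^2 / (n * sum x^2), in [1/n, 1]."""
    n = len(values)
    if n == 0:
        raise ValueError("need at least one value")
    total = sum(values)
    sum_of_squares = sum(v * v for v in values)
    if sum_of_squares == 0:
        # All values are zero: treated as perfectly equal (a convention chosen here).
        return 1.0
    return (total * total) / (n * sum_of_squares)


# Hypothetical mean prices offered to three customer groups.
equal_prices = jains_index([10.0, 10.0, 10.0])   # identical prices
skewed_prices = jains_index([30.0, 0.0, 0.0])    # one group carries everything
```

With identical prices the index reaches its maximum of 1; in the skewed case it drops to its minimum of 1/3 for three groups.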

The RL algorithm considers two expressions: one that tries to maximize returns in each group of customers, and another that tries to keep fairness as high as possible across groups of buyers.
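One simple way to combine two such expressions is a weighted sum of a revenue term and a fairness term. The sketch below is an illustration under stated assumptions, not the formulation used in the paper: the `fairness_weight` parameter, the normalisation by `max_revenue`, and the use of per-group mean prices in the fairness term are all hypothetical choices.

```python
from typing import Sequence


def jains_index(values: Sequence[float]) -> float:
    """Jain's fairness index: (sum x)^2 / (n * sum x^2)."""
    sum_of_squares = sum(v * v for v in values)
    if sum_of_squares == 0:
        return 1.0  # all zeros: treated as perfectly equal (convention chosen here)
    return sum(values) ** 2 / (len(values) * sum_of_squares)


def combined_reward(
    revenues: Sequence[float],
    mean_prices: Sequence[float],
    max_revenue: float,
    fairness_weight: float = 0.5,
) -> float:
    """Blend normalised revenue and fairness into one scalar reward (assumed form)."""
    if not 0.0 <= fairness_weight <= 1.0:
        raise ValueError("fairness_weight must lie in [0, 1]")
    if max_revenue <= 0:
        raise ValueError("max_revenue must be positive")
    revenue_term = sum(revenues) / max_revenue    # scaled to roughly [0, 1]
    fairness_term = jains_index(mean_prices)      # already in [1/n, 1]
    return (1.0 - fairness_weight) * revenue_term + fairness_weight * fairness_term


# Hypothetical numbers: three groups, identical prices, half of the achievable revenue.
reward = combined_reward(
    revenues=[40.0, 30.0, 30.0],
    mean_prices=[10.0, 10.0, 10.0],
    max_revenue=200.0,
    fairness_weight=0.5,
)
```

Setting `fairness_weight` to 0 recovers pure revenue maximization, while 1 rewards only equal treatment across groups; values in between trace out the tradeoff.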

“We demonstrate that RL provides two main features to support fairness in dynamic pricing: on the one hand, RL is able to learn from recent experience, adapting pricing policy to complex market environments; on the other hand, it integrates fairness into the model’s core”, says one of the lead data scientists behind this research, Roberto Maestre.

Fairness Design Principles

Pricing policies perceived as unfair have been shown to produce some of the most negative reactions customers can have to pricing, and may result in long-term losses for a company. This is even more relevant in a world where information is shared rapidly and the price differences produced by profit-maximizing dynamic pricing are evident to consumers while, until now, evidence of fairness was nowhere to be seen.

What RL helps us do is balance fairness and profit in real time, in a complex and fuzzy environment of price fluctuations and for a diverse range of financial products. RL is ideal for this kind of problem because it learns by trial and error while interacting with the environment, as opposed to learning from labeled data; that is, no prior knowledge about how the environment works is necessary.
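As an illustration of learning by trial and error, the following sketch uses an epsilon-greedy bandit, a deliberately simplified stand-in for a full RL algorithm and not the method used in the paper. The agent never sees the customer's acceptance curve; it only observes whether each offered price is accepted. The price grid, the logistic acceptance curve, and all parameter values are hypothetical.

```python
import math
import random
from typing import List, Tuple

PRICES: List[float] = [20.0, 40.0, 60.0, 80.0]  # hypothetical price grid


def accepts(price: float, rng: random.Random) -> bool:
    """Simulated customer, hidden from the agent: acceptance drops around a price of 50."""
    p_accept = 1.0 / (1.0 + math.exp(0.2 * (price - 50.0)))
    return rng.random() < p_accept


def learn_prices(
    steps: int = 5000, epsilon: float = 0.1, seed: int = 0
) -> Tuple[List[float], List[int]]:
    """Estimate the revenue of each price purely from observed accept/reject outcomes."""
    rng = random.Random(seed)
    estimates = [0.0] * len(PRICES)  # running mean revenue per price
    counts = [0] * len(PRICES)       # how often each price was offered
    for _ in range(steps):
        if rng.random() < epsilon:
            arm = rng.randrange(len(PRICES))                          # explore
        else:
            arm = max(range(len(PRICES)), key=lambda i: estimates[i])  # exploit
        reward = PRICES[arm] if accepts(PRICES[arm], rng) else 0.0
        counts[arm] += 1
        estimates[arm] += (reward - estimates[arm]) / counts[arm]     # incremental mean
    return estimates, counts


estimates, counts = learn_prices()
learned_price = PRICES[max(range(len(PRICES)), key=lambda i: estimates[i])]
```

No labeled examples of "good" prices are supplied at any point: the estimates are built entirely from the rewards observed while interacting with the simulated customer.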

“With this new algorithm we can have transparency on how a price is set and convey this measure of fairness to the customer, thereby improving trust in our services”, says Maestre.

Data scientists at BBVA Data & Analytics ran an experiment in which they considered groups of customers that respond differently to a pricing policy: some are very sensitive to price changes, with a very low probability of bid acceptance beyond a certain threshold; others show no significant response to price changes; and another group actually increases its probability of acceptance when the price increases (much like buyers of luxury goods). To achieve both maximum revenue and fairness, the RL model has to maximize profit while keeping the price difference among groups to a minimum. This approach can eliminate bias in pricing policies, as it applies fairness equally to all sets of clients considered (e.g., by gender, race, income level, …).
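The three behaviours described above can be sketched as acceptance-probability curves. The functional forms and parameters below (logistic curves, a threshold of 50, a constant level of 0.6) are assumptions chosen for illustration and are not taken from the paper. The sketch then finds the revenue-maximizing price for each group on a hypothetical price grid.

```python
import math
from typing import Callable, Dict, List


def price_sensitive(price: float, threshold: float = 50.0, steepness: float = 0.5) -> float:
    """Acceptance probability collapses once the price passes the threshold."""
    return 1.0 / (1.0 + math.exp(steepness * (price - threshold)))


def price_insensitive(price: float, level: float = 0.6) -> float:
    """Acceptance probability does not react to the price."""
    return level


def luxury_like(price: float, midpoint: float = 50.0, steepness: float = 0.1) -> float:
    """Acceptance probability rises with the price."""
    return 1.0 / (1.0 + math.exp(-steepness * (price - midpoint)))


GROUPS: Dict[str, Callable[[float], float]] = {
    "price_sensitive": price_sensitive,
    "price_insensitive": price_insensitive,
    "luxury_like": luxury_like,
}


def expected_revenue(price: float, accept: Callable[[float], float]) -> float:
    """Expected revenue of one offer: price times probability of acceptance."""
    return price * accept(price)


PRICE_GRID: List[float] = [float(p) for p in range(1, 101)]  # hypothetical grid, 1 to 100

# Revenue-maximizing price per group when each group is optimised in isolation.
best_price: Dict[str, float] = {
    name: max(PRICE_GRID, key=lambda p, f=fn: expected_revenue(p, f))
    for name, fn in GROUPS.items()
}
```

Optimised in isolation, the price-sensitive group ends up with a lower price than the other two, which are pushed to the top of the grid. That gap is the kind of difference between groups that a fairness term is meant to limit.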

Transparency and accountability

Despite the simplicity of the proposed environment, the paper opens a rich discussion about what is fair in pricing, starting with a specific way to measure it. Furthermore, this study enables us to benchmark the resulting pricing policies in open environments such as The Turing Box. As long as these platforms allow anyone, not just computer scientists, to study AI, we will benefit from valuable feedback.

Roberto Maestre will be presenting the conclusions of his paper at the Intelligent Systems Conference in London this September.