Bringing Artificial Intelligence to Its True Potential

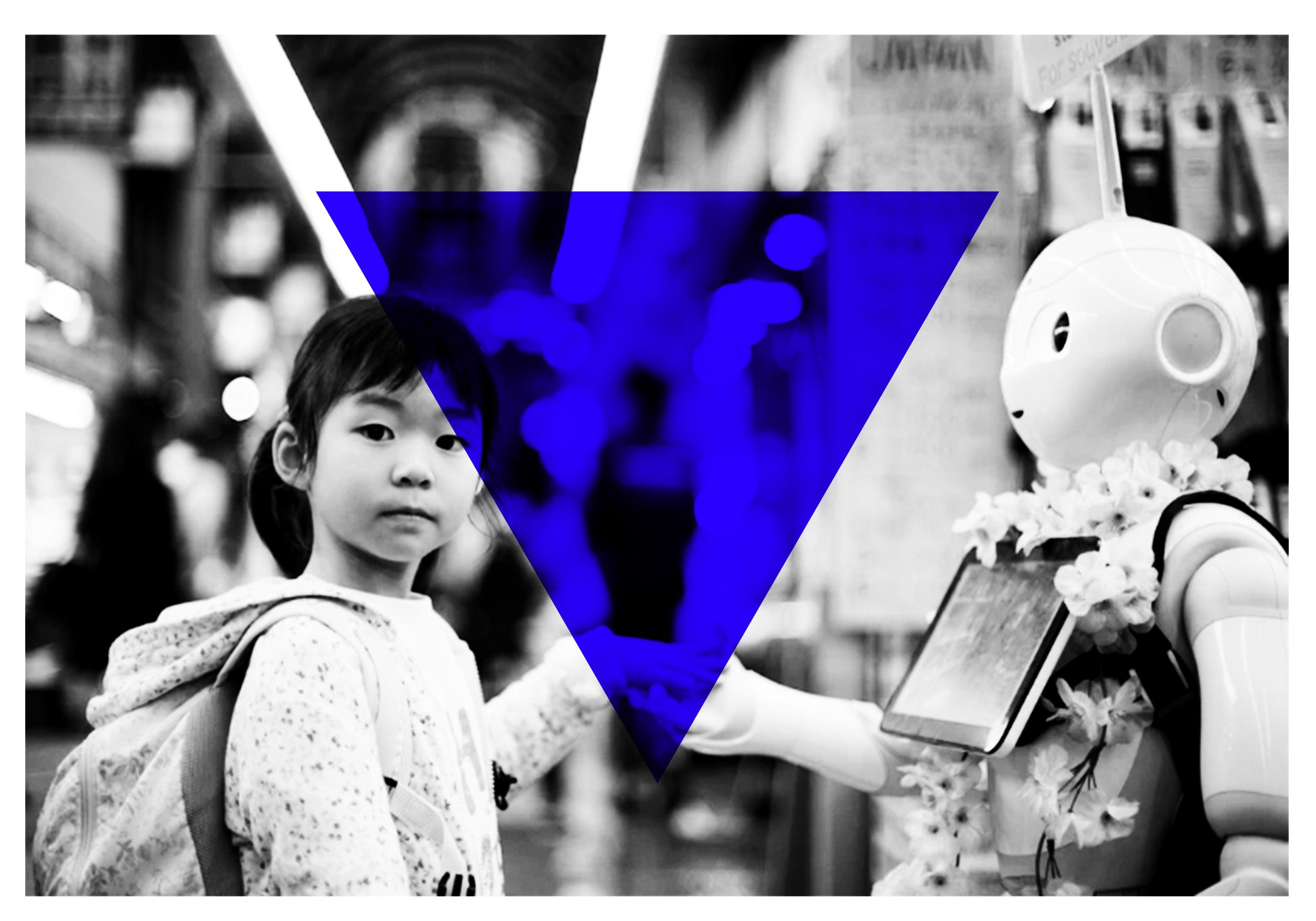

Since 1956, when the term Artificial Intelligence, coined by John McCarthy, gave its name to a conference at Dartmouth College, we have reached unthinkable heights: we have taught machines to see and to recognize images and text. We have taught them to read, listen, speak, and translate. And still they are not even close to having full human capacities. They do not comprehend, they do not understand, and they do not learn beyond their training from the environment in which they are situated; they remain a mere concatenation of pre-programmed and statistical processes. They have unmatched computing power but little or no true creativity, and a superb ability to combine and analyze possibilities but not a single spark of sudden self-consciousness. If someone tells you that the “singularity”, the moment in which Artificial Intelligence achieves a definitively human-like character and surpasses our own biological limits on intelligence, is around the corner, know that such projections are not well-founded: the consensus among experts is that we are still decades away from a singularity point, and some think we might never reach a truly human-like intelligence.

Regardless of whether we will ever have to address machines as intellectual equals or superiors, the AI revolution is unstoppable in many other ways. The resources and talent that the largest global corporations devote to AI are accelerating a new industrial revolution in which the speed of adoption is outstripping the pace of adaptation. According to consulting firm Accenture, American companies are expected to invest 35 trillion dollars in cognitive technologies before 2035, and that figure does not take into account other big players such as Europe, China or Japan. Governments, such as the French, are recognizing the importance of AI for the economy and for society. In Spain, the Ministry of Energy, Tourism and Digital Agenda (Minetad) has created a group of experts that is working on a White Paper on AI.

The fact that increasingly complex automation is being applied to problems that not long ago were handled entirely by humans opens the door to many opportunities, but also to risks, threats, and misunderstandings. In this scenario, we should be more concerned about the human bias introduced into AI than about self-conscious killing machines. To avoid misuse, ethical norms have to be at the core of AI development and must resolve questions such as “how” and “what for”.

Ethical development must help fight bias and discrimination, and must provide transparency, model audits, and strict respect for privacy. The new AI could be used to achieve the Sustainable Development Goals just as easily as to win a war. The range of dilemmas these technologies bring to the table goes well beyond the engineering domain, even though knowledge of the technology's intricacies is necessary to deconstruct the problem.

Some initiatives are building a body of principles and rules, such as the 23 Asilomar AI Principles. Governments (again, France is moving ahead rapidly) are suggesting the need for a global agreement on AI.

Killed by machines

The fact that AI can be used to make life-and-death decisions requires clear ethical norms that limit the possibility of AI negligence or weaponized AI (see the controversy over Google's participation in the Pentagon's Project Maven). Intelligent machines are going to be embedded in every kind of infrastructure, from air traffic control to the electric grid and urban planning. The imminence of self-driving cars, trucks and passenger planes raises understandable public concern and requires solid safeguards against hacking and unfair decision-making, as well as guarantees of a proper response to unexpected events.

Government, civil society, academia, and businesses have to establish channels of communication to implement measures that reduce the occurrence and impact of errors. Furthermore, they have to create platforms on which to measure and correct algorithmic decisions that perpetuate or widen inequality and promote bias. In this early stage of human-taught machines, there is a high risk that machine learning designers will transmit biases, deliberately or inadvertently, that make society more unequal instead of moving it forward.

To avoid misunderstandings, measures to increase transparency will be essential. The problem of “black box” machine learning models, whose internal reasoning is opaque even to their creators, could worsen if intelligent machines are entrusted with making decisions. The ability of these machines to “explain” the reasoning behind a decision will improve accountability and supervision, and will avoid handing artificial agents full control over problems that affect people.

Unemployed by machines

The next challenge is how to integrate AI into a world where different levels of economic development, political priorities, and workforce skills coexist. The G20 is already working on measures to mitigate the impact of automation in developed countries and in certain sectors that are likely to be highly automated in the next few decades. Ideas such as a basic income or “taxing robots” should at least be considered by policymakers in order to correct extreme inequalities and to avoid pushing large numbers of citizens to the fringes of society, without the resources to join the AI economy.

As economists Anton Korinek and Joseph Stiglitz put it: “innovation could lead to a few very rich individuals, whereas the vast majority of ordinary workers may be left behind, with wages far below what they were at the peak of the industrial age”.

Augmented by machines

But the creative potential of AI is much greater and outweighs these risks. By helping humans perform tasks more efficiently, AI could equip workers with almost superhuman abilities to carry out complex tasks that draw on vast amounts of information, and to deliver services and products personalized to a degree that even customers do not know is possible.

A worker “augmented” by AI could deliver better-distributed and fairer transactions and services, which could bring the world into a new era of development and inclusion. Not only will tedious tasks eventually be eliminated; humans will also have more time for creative work and for reflecting on the true purpose of new technologies.

Medical diagnostics could become almost flawless, and ways of preventing or solving potential adverse scenarios could be analyzed in record time. Education, healthcare and finance services (key components of an AI-driven economy) would become more accessible, even for those who have had unequal access until today, because a personalized path to inclusion could be built for each person. At the same time, humans will no longer have to be exposed to dangerous or grueling jobs, which would lead to a services-only type of workforce.

Using AI for social good can help us identify hidden needs in vulnerable parts of the population, find insights into the way wealth is transmitted and circulates through the economic system, and fight corruption, fraud, and crime. AI can also open channels for a more direct, participatory democracy, speed up bureaucratic processes, and improve budgetary prioritization. For all these goals to be achievable, these AI tools should incorporate principles that are increasingly present in the debate on the development of automation: “Privacy by Design”, “Ethics by Design”, “Fairness by Design”, “Sustainable by Design”, “Transparent by Design”, etc.

In conclusion: the augmented capabilities that AI is going to add to our capacity to process information and make decisions should improve our lives in general, should eliminate tedious tasks in the workplace, will increase productivity in the medium to long run, and will most likely free us to undertake more creative tasks or simply reduce the workload per capita. The shortcomings that could arise during this complex transition have to be tackled not only from a technological perspective but also from socioeconomic and ethical points of view. Effective political frameworks and accountability mechanisms will be a necessary component if we want to extract the full potential of AI technologies.