No Problem Too Big; No Solution Too (Computationally) Small

At BBVA Data & Analytics we are constantly tackling business problems with applied maths, statistics or econometrics. There is no problem too big; but it turns out the solution can be sometimes too big. That premise took BBVA’s data scientist Jordi Nin and Jordi Aranda to explore a way to improve the quality of the insights offered by Commerce 360, an app offer to SME’s to analyze their business ecosystem and purchase patterns.

What Nin and Aranda were trying to work out was a system in which you can provide the most disaggregated information possible about similar and surrounding businesses without violating the privacy rules of any of the elements of the set and subset. This would have allowed businesses to compare themselves against the competition without violating the privacy that every customer at BBVA must have protected.

Nin and Aranda, that worked together with mathematician Javier Herranz of Universitat Politècnica de Catalunya on an algorithm solution to this problem in order to add more flexibility to the way data is used by small businesses, thus making it much more meaningful. “We created a beautiful and detailed mathematical formulation to this problem”, explains Nin from BBVA Data & Analytics’ sunny offices in downtown Barcelona. The approach consisted in two phases: one that creates all the forbidden subsets of businesses, because they don’t, individually, satisfy a specific privacy condition. Later on, an online phase, which is run for each new query, validates if the requested aggregate information can be safely released, taking into account all the previous accepted queries.

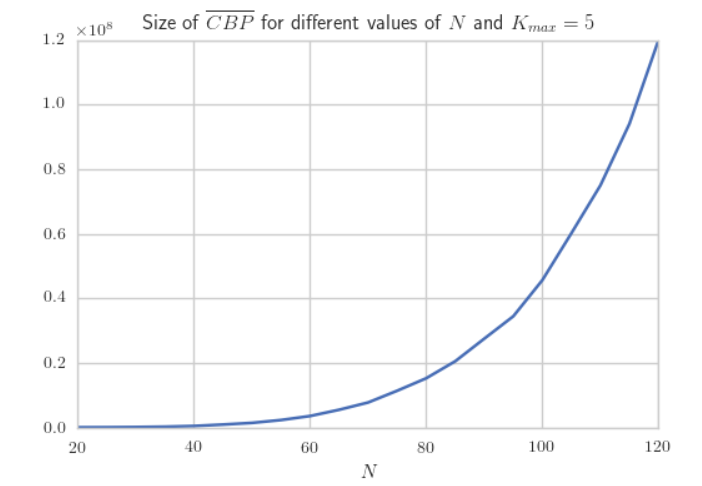

The problem is that with such a vast universe of business data and a formulation with exponential running time, checking individually for privacy conditions of each element of a set to create a subset of valid queries has a running time of O (2n). So the size of subset that defines the privacy conditions will make processing times almost impossible to handle unless quantum computing becomes a reality.

What scientist found is that in order to prevent non-aggregate companies information as required by privacy laws and at the same time allow fine search on the competition is almost an NP problem for the universe of data we are using, therefore needing such a humongous computational processing time that is not feasible nowadays.

“Sharing aggregated information in a private manner is a significant and challenging problem in data-based products and applications. Although it is possible to increase the performance of the proposed algorithms, in the near future we would like to study alternative ways to reduce the cost of computing the list of forbidden subsets”, says Nin.

The conclusion of this experimental work on how to tackle extremely challenging computational problems is being published after the peer-reviewed process in Proceedings of COMPSAC 2018 and will be presented this July in Tokyo (Japan) during the 42nd IEEE conference on Computer, Software and Applications.