It is Not About Deep Learning, But Learning How to Represent

Recently, we setup a workgroup dedicated to Deep Learning (DL). Workgroups offer opportunities to share internally ideas, concepts, resources, code, etc. Additionally, they are meant to promote the use of Machine Learning at BBVA. I remember vividly how José Antonio Rodríguez, one of the impellers of this workgroup, told us back then: “We should call it the workgroup on representation learning, rather than on deep learning”. Today, I am convinced his perspective was correct: a crucial aspect of DL is that it helps make better representations (abstractions) of nature -and business, for that matter.

So, given all the hype that is surrounding DL these days, I thought it would be timely to reflect and think about what DL has actually brought to the Machine Learning community, and separate the wheat from the chaff. In this post, I will try to highlight the importance of representation learning for the development of Artificial Intelligence, and describe the role of DL as a representation learning framework.

Representing Objects and Concepts

One of the fundamental problems in Machine Learning is how a machine should represent an object/concept. Machines only understand numbers, so we need to find a way to condensate all the information about an object in a set of numbers (i.e. a vector). Roughly speaking, the vector would be the representation -I’ll be more precise later on-. Such representation can later be used to perform different tasks; whether we want to classify the object, generate new ones or do anything else, it’s not important for our discussion. In any case, we will need a proper representation.

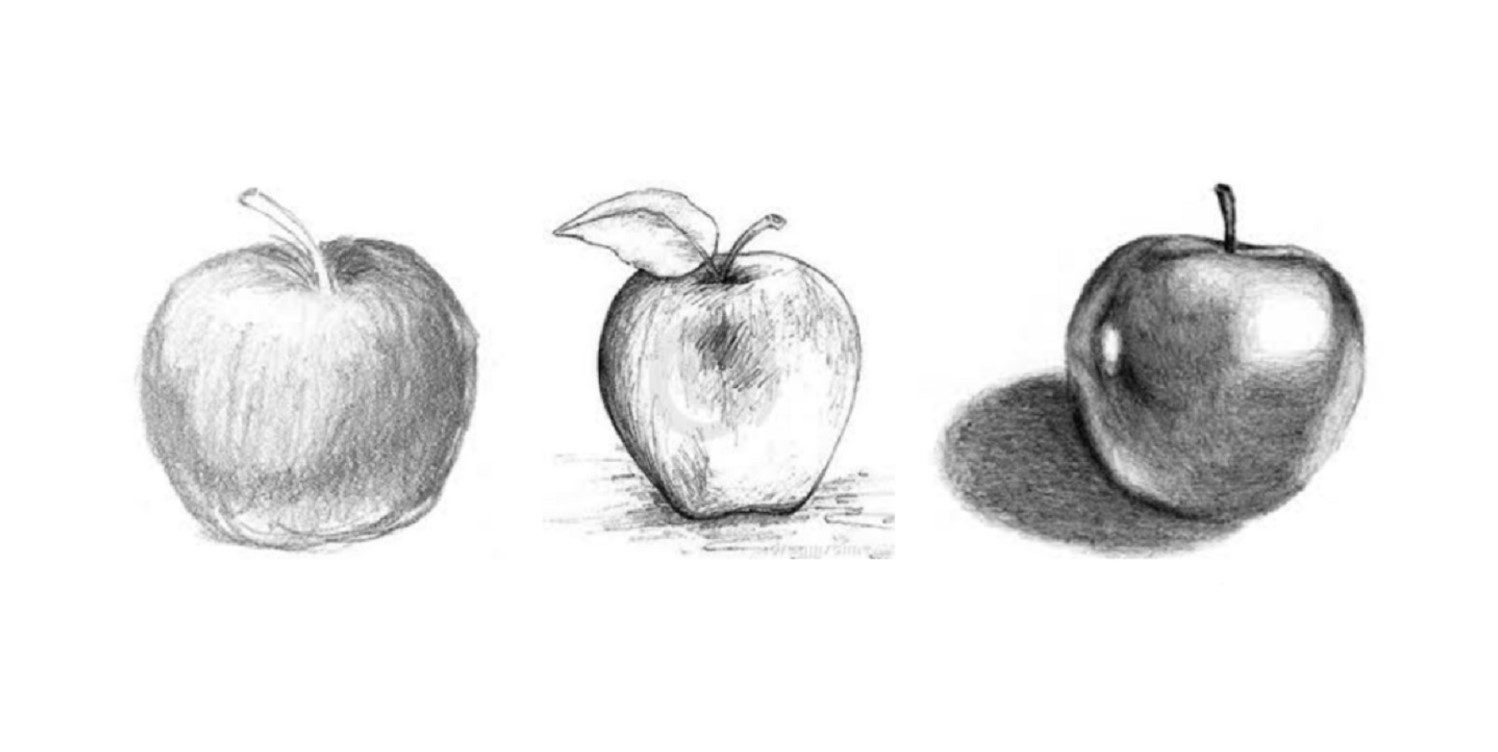

Let me explain what a representation is with a simple example: an apple. The machine needs to find a set of numbers (a vector) that properly represents what makes an apple an apple: its texture, color, taste, smell, shape, etc. Moreover, the representation should reflect different contexts where the concept apple appears: it shouldn’t appear in the space (not often, hopefully), although it is common to observe an apple hanging from a tree. This generic representation of the apple can later be used to create new apples with different brightness, shadows and so on -each particular apple will be a realization of the abstract representation of the concept apple-. Or to paint a still life that contains a beautiful and shiny apple at the centre. As we said above, the task doesn’t matter for this discussion; what is important is that the machine needs a proper representation of the concept apple.

As some of you might be wondering at this point, can all apples be represented by a single vector? Certainly not. We need a complete set of vectors, that will be most likely similar -for some definition of similarity, that I leave for another post-. The (Riemannian) space where these apple vectors live in is a mathematical structure known as a manifold (see subsection 5.11.3 in the Deep Learning book and the excellent post of Christopher Olah). Thus, the task of any representation learning technique is to approximate such manifolds.

Deep Neural Networks

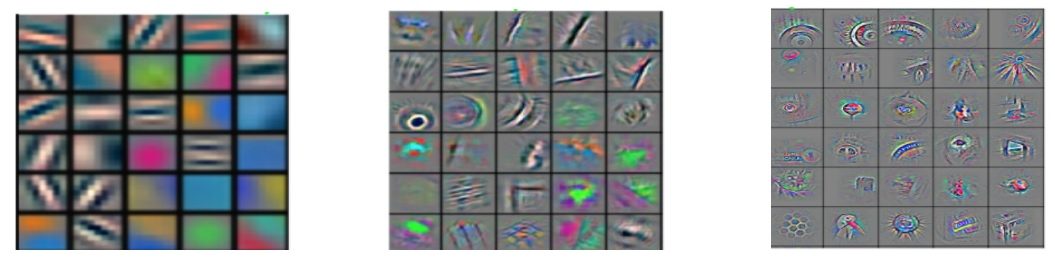

So, what about Deep Learning? Well, DL is nothing else but a (powerful) technique for learning representations from data, in a hierarchical and compositional way. Deep Neural Networks (DNN) learn representations of an input object by learning abstractions of increasing complexity, that can be composed to generate higher level concepts, and finally, a proper abstraction of the original object. Hence, it is hierarchical (from lower to higher abstractions) and compositional (low level abstractions give rise to higher level ones). This is shown schematically in the figure above: the complexity of the representation increases with depth (from left to right in the figure above). Please, notice that deeper layers representations (higher layer abstractions) are composed from previous layers (lower level abstractions) as the information passes through the network (see, for instance, this animated gif that exemplifies information flow in image classification with TensorFlow).

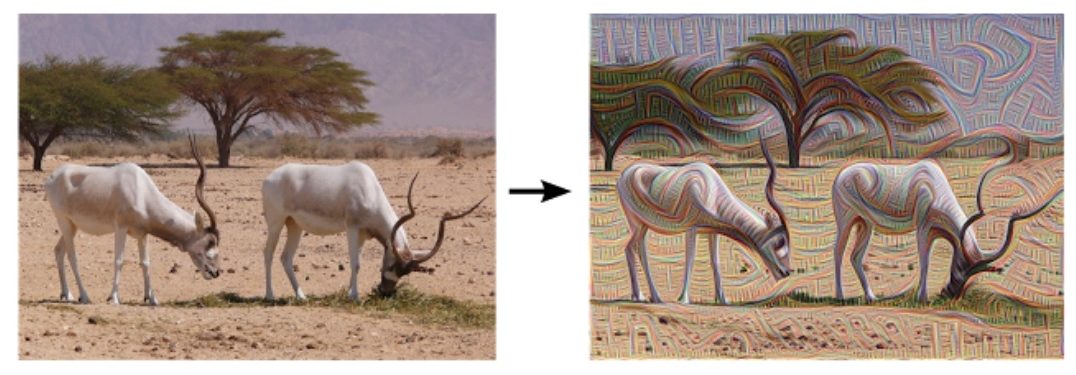

Although DNNs alone have been successfully applied in several fields such as image classification, speech recognition, etc., it is important to realize that their main contribution in those cases was the creation of a proper representation. For instance, in the case of a DNN performing image classification, the network learns simultaneously a classificator and a representation. The learnt representation can be used then for finding similar images. Or it could be used to generate new ones. In the image on the left, from Erik Bernhardsson’s blog, a DNN was trained on a dataset consisting of 50k different fonts. Completely new fonts are created by sampling from the obtained representation; here, an interpolation between some of these fonts is shown. There are several nice animated gifts on that blog, that further demonstrate the potential of proper representation learning. One could even go further with DNN representations and let the network hallucinate a little bit, see the figure below.

Another paradigmatic example is the case of DeepMind and the ancient game of Go. For the mastering of this game by an AI, Reinforcement Learning (RL) techniques were applied to prevent the use of a full Monte-Carlo tree-search. Here, DNNs provided RL with a proper representation of the full game board -technically speaking, of the policy and value functions given board states and player actions-. You can find an easy-to-follow explanation of DeepMind’s AlphaGo algorithm here.

Next steps

Coming back to the definition of DL, are the hierarchical and compositional aspects the whole story? Well, not really. One thing that, in my opinion, has made DNNs so successful is the incorporation of symmetries. Symmetries have allowed DNNs to overcome the curse of dimensionality and, in turn, being able to select a small family of functions from the infinite set of possible ones. In other words, they have allowed computers to learn representations from data efficiently and effectively . But this is another -fascinating, though- story. I will leave the discussion on the curse of dimensionality, and the importance of incorporating symmetries while learning, for another post.

While we work on it, you might want to have a look at the work of Stephane Mallat and Joan Bruna on Scattering Convolutional Networks, that provides a mathematical framework for understanding Deep Convolutional Nets, and the recent work on the connection between Deep Learning and Physics by Lin, Tegmark and Rolnick.

Hopefully after this reading, you will get a clearer view of what Representation Learning means, and how Deep Learning fits into this discipline.