The Routing agent: a key component for building agentic AI assistants at scale

Since large language models (LLMs) emerged and came into widespread use three years ago, one of their most obvious applications has been improving the capabilities of digital assistants, both inside companies and in customer service. The banking sector was quick to see this potential as well.

This led to the first assistants capable of providing relevant information to financial advisors and managers, making these professionals’ day-to-day work with customers easier. Moreover, these LLM-based assistants no longer required users to type keywords or pick from preconfigured options; they understood natural language, which made them considerably more useful.

Today, beyond responding with accurate and up-to-date information, AI assistants can also perform operations on behalf of the user. This is the revolution of agentic AI, a technology that we are already applying at BBVA.

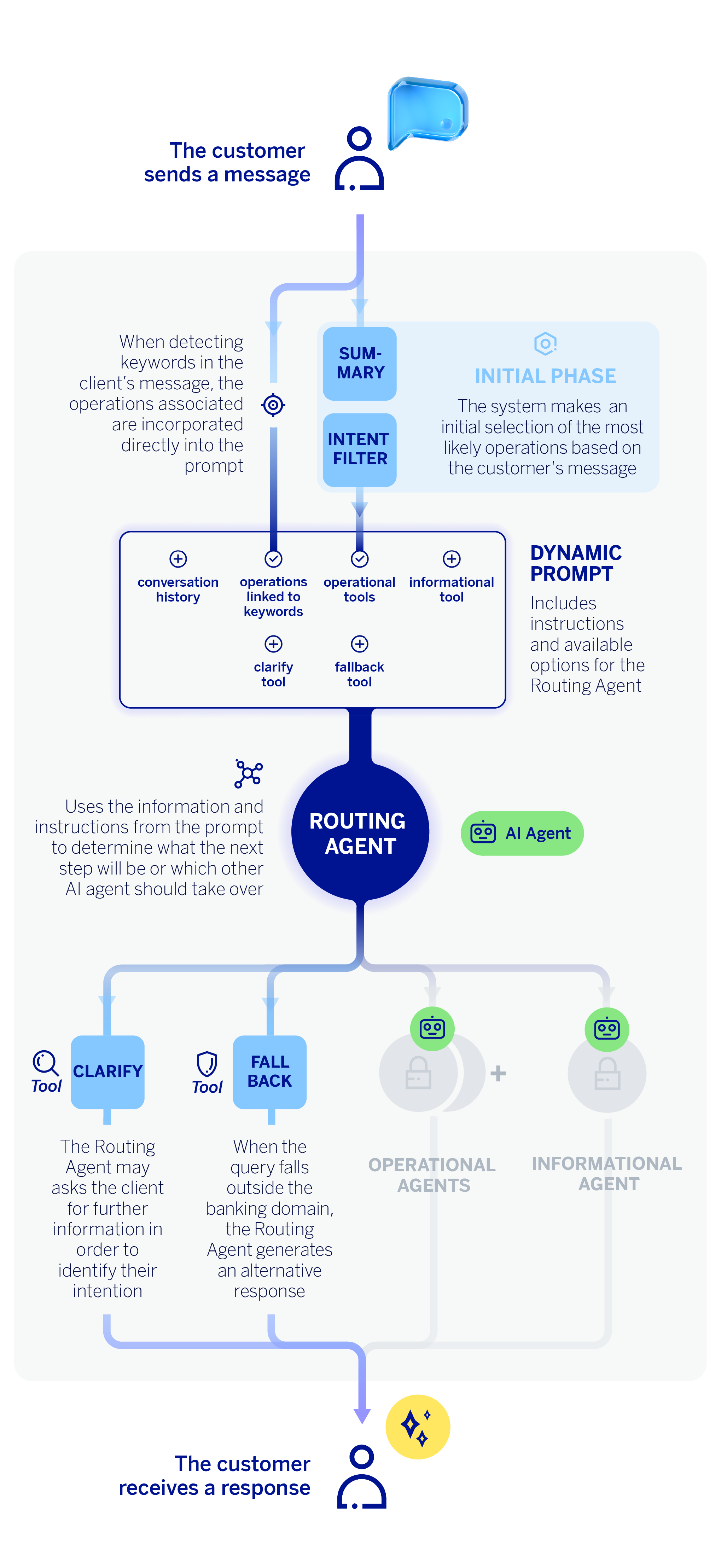

In this article, we present our work on a conversational customer service assistant that not only understands the user, responds with relevant information, and follows the thread of the conversation, but also carries out basic banking operations from start to finish. This is possible thanks to an architecture based on intelligent agents: specialized, autonomous components that collaborate along the whole chain, from understanding the user’s intention (the purpose or goal the user wants to achieve with their message or query) to executing a specific operation. We begin with the main actor in our system: the AI agent responsible for identifying the customer’s need and deciding how to resolve it. This is the classification agent, or Routing agent.

The main role of the Routing agent

The conversation between the machine and the customer begins by identifying the customer’s intention. What does the customer want? Are they looking for information, or do they want to carry out a banking operation? This distinction matters to the Routing agent because, depending on the answer, it must hand the request over to a different AI agent.

On the one hand, the available operations will be executed by agents specialized in each task. A banking operation is any process that requires access to specific customer information. Making an instant mobile payment or checking the available balance in an account are examples of operations.

On the other hand, requests for information are answered by the informational agent, which is built on a RAG (Retrieval-Augmented Generation) architecture. By a request for information, we mean a generic query that can be answered in the same way for every customer.

What makes this system different is its contextual reasoning. It does not simply look for keywords; it understands the actual meaning of the query, takes the conversation history into account, and weighs the subtleties of natural language. Beyond distinguishing among multiple banking operations and informational queries, another of its most innovative features is its ability to detect when it needs more information from the customer. Instead of guessing or giving an incorrect answer, the assistant can start a dialogue to clarify the customer’s intention (the clarification or disambiguation function). For example, imagine a customer who simply types “contract.” The agent cannot tell what the customer wants to sign up for, so it replies with a clarifying question such as: “Sure, I’m here to help. Would you like to sign up for an account, a card, or another banking product?”

In short, the Routing agent uses an LLM that acts as a virtual banking expert capable of analyzing the full context of the conversation. It works as our assistant’s “decision center,” classifying the customer’s request into one of three main categories: execute an operation (and, in that case, determine which one), answer a query with general information, or ask the customer for more information.
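The three outcomes can be captured as a small data type that the rest of the system consumes. The following is a minimal Python sketch; the names `Route` and `RoutingDecision` are hypothetical and not taken from the production system. The checks in `__post_init__` encode two rules from the text: only an operation decision names an operation, and a clarification decision must carry a question for the customer.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Route(Enum):
    """The three main categories the Routing agent chooses from."""
    OPERATION = "operation"          # execute a specific banking operation
    INFORMATION = "information"      # answer with general information (RAG agent)
    CLARIFICATION = "clarification"  # ask the customer for more details


@dataclass(frozen=True)
class RoutingDecision:
    route: Route
    operation: Optional[str] = None  # set only when route is OPERATION
    message: Optional[str] = None    # question shown to the customer on CLARIFICATION

    def __post_init__(self) -> None:
        # An operation name must be present if and only if we route to an operation.
        if (self.route is Route.OPERATION) != (self.operation is not None):
            raise ValueError("operation must be set if and only if route is OPERATION")
        # A clarification is useless without the question to ask.
        if self.route is Route.CLARIFICATION and not self.message:
            raise ValueError("a clarification decision needs a message")
```

For the “contract” example above, the agent’s output would correspond to `RoutingDecision(Route.CLARIFICATION, message="Would you like to sign up for an account, a card, or another banking product?")`.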

The Routing agent also receives descriptions of the operations it can execute, which come from a prior filtering step. In other words, the agent does not choose among all possible operations, but among a pre-selected subset. Asking an LLM to pick a label from a small set of 5 or 6 options is considerably more efficient than asking it to choose among more than 150 operations at once. Besides the practical limits on how much a prompt can hold, the paradox of choice also applies: decisions are easier when there are fewer options. The goal of this preliminary phase is therefore to narrow down the candidate operations, gaining efficiency and scalability.
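A minimal sketch of such a pre-filter, assuming a catalog that maps operation names to descriptions. The article does not say how the filtering is done; here a naive word-overlap (Jaccard) score stands in for whatever retriever is used in practice, where an embedding search would be a common choice. The catalog entries and the `prefilter` function are hypothetical.

```python
import re
from typing import Dict, List, Set

# Hypothetical catalog: operation name -> description. A real catalog would
# hold 150+ entries; only the shape matters here.
CATALOG: Dict[str, str] = {
    "check_balance": "check the available balance of an account",
    "instant_payment": "send an instant mobile payment to a contact",
    "block_card": "block a lost or stolen card",
    "open_account": "open a new bank account",
    "card_limit": "change the spending limit of a card",
    "transfer": "make a bank transfer to another account",
}


def tokens(text: str) -> Set[str]:
    """Lowercase word tokens; a deliberately naive stand-in for embeddings."""
    return set(re.findall(r"[a-z]+", text.lower()))


def prefilter(query: str, catalog: Dict[str, str], k: int = 5) -> List[str]:
    """Return the k operations whose descriptions best overlap the query."""
    q = tokens(query)

    def score(name: str) -> float:
        d = tokens(catalog[name])
        union = q | d
        return len(q & d) / len(union) if union else 0.0  # Jaccard similarity

    # Python's sort stays stable with reverse=True, so ties keep catalog order.
    return sorted(catalog, key=score, reverse=True)[:k]
```

Only the `k` surviving operations would then be offered to the LLM as tools, which keeps the prompt short no matter how large the catalog grows.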

The technology behind the Routing agent

From a technical standpoint, this decision engine, the Routing agent, uses OpenAI’s most advanced GPT models. The system relies on a tool-based architecture, an innovative approach to conversational system design. The agent also has access to the conversation history and to a set of instructions in the form of a dynamic prompt, which guides how the Routing agent operates.
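To illustrate how a dynamic prompt and the conversation history can be combined, the sketch below assembles a message list in the role/content format used by chat-style LLM APIs. The fragment store, its keys, and `build_messages` are hypothetical; the point is only that the instructions are composed at request time instead of being hard-coded.

```python
from typing import Dict, List

Message = Dict[str, str]

# Hypothetical prompt fragments; in practice they would be loaded from
# configuration so they can change without redeploying code.
PROMPT_FRAGMENTS: Dict[str, str] = {
    "role": "You are a banking expert who routes customer requests.",
    "rules": "Call exactly one tool per turn.",
    "tone": "Be brief and polite when asking for clarification.",
}


def build_messages(history: List[Message], fragments: Dict[str, str],
                   order: List[str]) -> List[Message]:
    """Compose the system prompt from the selected fragments and prepend it
    to the conversation history, which is passed through unchanged."""
    system_prompt = "\n".join(fragments[key] for key in order)
    return [{"role": "system", "content": system_prompt}, *history]
```

Which fragments are included, and in what order, can then vary per channel or experiment while the history always travels with the request.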

By a tool-based architecture, we mean that each banking operation is modeled as a specific tool that the LLM can invoke using the function calling pattern. The tools are implemented as Python classes whose technical descriptions (that is, the descriptions of the operations) are loaded dynamically. As a result, we can A/B test different versions of the descriptions without modifying any code.
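One way to realize this, sketched under assumptions: each tool is a Python class that declares its name and argument schema, while its description is looked up at load time from an external store keyed by experiment variant. The output follows the `tools` format of OpenAI’s function calling (`type`, `function.name`, `function.description`, `function.parameters`). `BankingTool`, `CheckBalance`, the JSON store, and the variant labels are hypothetical.

```python
import json
from typing import Any, Dict, List, Type

# Hypothetical description store with two variants per tool, e.g. read from a
# JSON file or a config service. Editing it requires no code change.
DESCRIPTIONS_JSON = """
{
  "A": {"check_balance": "Check the available balance of one of the customer's accounts."},
  "B": {"check_balance": "Use when the customer asks how much money they have in an account."}
}
"""


class BankingTool:
    """Base class: subclasses declare a name and a JSON Schema for arguments."""
    name: str = ""
    parameters: Dict[str, Any] = {"type": "object", "properties": {}}

    @classmethod
    def to_schema(cls, descriptions: Dict[str, str]) -> Dict[str, Any]:
        # The description is injected here, not hard-coded in the class.
        return {
            "type": "function",
            "function": {
                "name": cls.name,
                "description": descriptions[cls.name],
                "parameters": cls.parameters,
            },
        }


class CheckBalance(BankingTool):
    name = "check_balance"
    parameters = {
        "type": "object",
        "properties": {
            "account_alias": {"type": "string", "description": "Account the customer refers to, if any."}
        },
    }


def load_tools(variant: str, tool_classes: List[Type[BankingTool]]) -> List[Dict[str, Any]]:
    """Build the tool list for one A/B variant."""
    descriptions = json.loads(DESCRIPTIONS_JSON)[variant]
    return [cls.to_schema(descriptions) for cls in tool_classes]
```

Switching from variant “A” to “B” only changes the text the model reads; the class, and therefore the code, stays the same.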

Besides the tools associated with operations, the Routing agent can use other tools: one to redirect the query to the informational agent, another to ask the customer for clarification when needed, and a last one to trigger the fallback, used when the agent determines that it cannot answer because the query falls outside the scope of banking.
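Once the LLM has picked a tool, the system still has to route that call to the right place. A minimal dispatch table might look like the following; the tool names and handler functions are hypothetical stubs standing in for the real agents, and the arguments arrive as a JSON string, which is how function-calling APIs return them.

```python
import json
from typing import Any, Callable, Dict

Handler = Callable[[Dict[str, Any]], str]


def informational_agent(args: Dict[str, Any]) -> str:
    # Stub for the RAG-based informational agent.
    return f"[informational agent] {args['query']}"


def reply_directly(args: Dict[str, Any]) -> str:
    # Clarification and fallback: the reply text is already in the arguments.
    return args["message"]


def operation_agent(name: str) -> Handler:
    # Factory for stubs of the agents specialized in each operation.
    def run(args: Dict[str, Any]) -> str:
        return f"[{name} agent] started with {args}"
    return run


HANDLERS: Dict[str, Handler] = {
    "route_to_informational_agent": informational_agent,
    "request_clarification": reply_directly,
    "fallback": reply_directly,
    "check_balance": operation_agent("check_balance"),
    "instant_payment": operation_agent("instant_payment"),
}


def dispatch(tool_name: str, arguments_json: str) -> str:
    """Send one tool call chosen by the LLM to the matching handler."""
    if tool_name not in HANDLERS:
        raise KeyError(f"unknown tool: {tool_name}")
    return HANDLERS[tool_name](json.loads(arguments_json))
```

Raising on an unknown tool name, rather than guessing, keeps a malformed model output from silently triggering an operation.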

In this sense, the fallback mechanism can be understood as a security layer, or guardrail, built into the system. For example, asking our conversational assistant what the weather will be like in Paris next Sunday has nothing to do with banking, so the assistant replies with a predetermined response. This example carries little risk, but as a general practice, we want to prevent the assistant from answering queries in areas where we are not experts.

To handle fallback or clarification cases, the Routing agent does not need to make another LLM call; instead, the response is generated through a parameter of the tool the agent invokes. That parameter’s description defines what the response should look like in each case, and it is complemented by other parts of the dynamic prompt. In this way, the message is generated within the same routing step.
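A sketch of how a single tool call can already carry the reply, assuming a clarification tool with a required `message` parameter whose description holds the writing instructions. The tool call is represented as a plain dict mirroring the JSON shape of a tool call in OpenAI’s Chat Completions API; the tool names, the schema wording, and `direct_reply` are hypothetical.

```python
import json
from typing import Any, Dict, Optional

# Hypothetical schema: the description of "message" tells the model how to
# write the reply, so the text comes back inside the tool call itself.
CLARIFICATION_TOOL: Dict[str, Any] = {
    "type": "function",
    "function": {
        "name": "request_clarification",
        "description": "Call when the request is ambiguous and more details are needed.",
        "parameters": {
            "type": "object",
            "properties": {
                "message": {
                    "type": "string",
                    "description": "A short, friendly question offering the most likely options.",
                }
            },
            "required": ["message"],
        },
    },
}

DIRECT_REPLY_TOOLS = {"request_clarification", "fallback"}


def direct_reply(tool_call: Dict[str, Any]) -> Optional[str]:
    """Return the customer-facing text if the tool call already contains it,
    or None when the call must be handed off to another agent."""
    fn = tool_call["function"]
    if fn["name"] not in DIRECT_REPLY_TOOLS:
        return None  # operation or informational route: no direct reply
    # Arguments come back as a JSON-encoded string, not a dict.
    return json.loads(fn["arguments"])["message"]
```

Because the reply is read straight out of the arguments, answering a clarification or fallback case costs no second model call.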

Closing remarks: Impact of an intelligent classification system on the conversational experience

The first objective of a conversational assistant when receiving a query is simply to understand clearly what the customer wants to do. This process is commonly known as triage or routing. In this article, we have taken an in-depth look at the AI agent that carries out this task: an LLM-based agent capable of processing the conversation and identifying whether the customer wants to perform a specific operation or obtain information on a banking-related topic.

Implementing an AI agent capable of classifying the user’s intention and then directing the task to the appropriate agent significantly improves the experience with a conversational assistant. This architecture, based on AI agents, overcomes the limitations of early generative AI-based assistants by incorporating new capabilities and adapting more effectively to the context and situation of each user.